Compressing Cache Data

Let’s say you have a CPU usage problem in your cache machine, in our example we’ll use Redis.

- Slow operations. In this case, you just find them from slowlog.

- You are using it too much. Everytime you use, there will be a TCP connection(probably there will be a connection pool though). You’ll be writing and reading data, everything you do has a cost, but even though it can easily handle way a lot of operations very fast, the more the CPU usage gets higher, the slower it will be.

Quote from the book Amazon Web Services in Action, Third Edition

When you go from 0% utilization to 60%, wait time doubles. When you go to 80%, wait time has tripled. When you to 90%, wait time is six times higher, and so on. If your wait time is 100 ms during 0% utilization, you already have 300 ms wait time during 80% utilization, which is already slow for an e-commerce website.

So the point is, do not be like “unless it hits %100, it’s fine”. It better stay low. So what can you do?

- Solve your slow operations. For instance, instead of DEL you can use UNLINK when you’re deleting big or a lot of data.

- Have a better machine. Most of the time it works, however in case of Redis, considering it’s single-threaded, it probably won’t help much.

- Compress your data, the less data will come and go out, so the faster it will be.

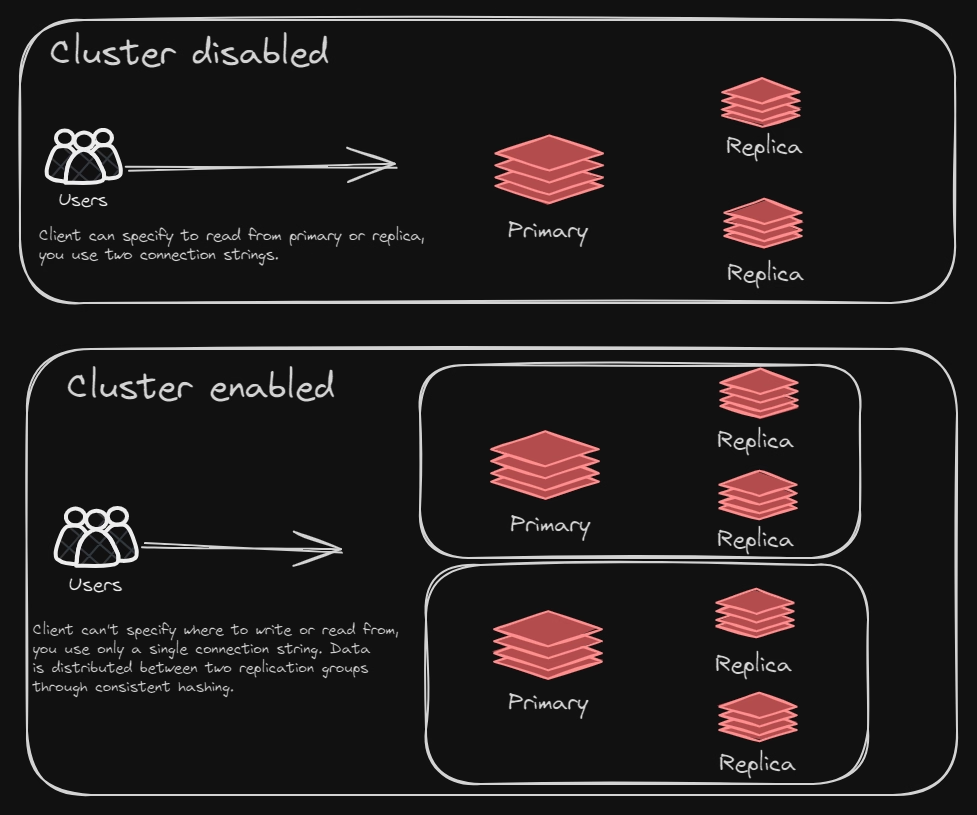

- Use replication. You can direct your reads to your replications. Beware of consistency issue, but most of the time, it won’t be a problem.

- Enable cluster mode. You can just divide the workload between multiple machines, therefore the instance will have less work to do.

Now, we’ll explore the third option. We’ll use two things, protobuf-net for serialization(don’t forget adding the necessary attributes in your classes), brotli for compression.

I wrote these extension metdos to use before & after calling Redis. The methods here also have async counterpart, but I felt like async in this case is a little unnecessary overhead. We have for both bytes and strings seperatetly because for Hashmaps in Redis requires string value.

[ProtoContract]

public class Person

{

[ProtoMember(1)]

public string Name { get; set; }

// Beware, if you don't initialize your collections by default

// Then when you compress and decompress, your empty collections will be null.

// If nulls aren't a problem you don't need to include []

[ProtoMember(2)]

public List<Person> Friends { get; set; } = [];

}

public static class CompressionExtensions

{

public static byte[] CompressAsBytes<T>(T data)

{

using var stream = new MemoryStream();

Serializer.Serialize(stream, data);

return CompressByBrotli(stream.ToArray());

}

public static T DecompressFromBytes<T>(byte[] data)

{

var cacheDecompressedData = DecompressByBrotli(data);

using var stream = new MemoryStream(cacheDecompressedData);

return Serializer.Deserialize<T>(stream);

}

public static string CompressAsString(string data)

{

using var memoryStream = new MemoryStream();

Serializer.Serialize(memoryStream, data);

var compressedData = CompressByBrotli(memoryStream.ToArray());

return Convert.ToBase64String(compressedData);

}

public static string DecompressFromString(string data)

{

var decodedData = Convert.FromBase64String(data);

var decompressedData = DecompressByBrotli(decodedData);

using var stream = new MemoryStream(decompressedData);

return Serializer.Deserialize<string>(stream);

}

private static byte[] CompressByBrotli(byte[] data)

{

using var input = new MemoryStream(data);

using var output = new MemoryStream();

using var stream = new BrotliStream(output, CompressionLevel.Optimal);

input.CopyTo(stream);

stream.Flush();

return output.ToArray();

}

private static byte[] DecompressByBrotli(byte[] data)

{

using var input = new MemoryStream(data);

using var output = new MemoryStream();

using var stream = new BrotliStream(input, CompressionMode.Decompress);

stream.CopyTo(output);

stream.Flush();

return output.ToArray();

}

}

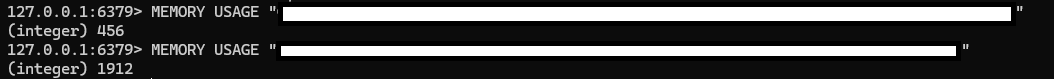

Result

As an example, it reduced one of our data lesser than its quarter. I haven’t tested extensively, but from my small tests I can say that, it’s better when data is bigger, I saw big decreases wherever I looked at. After implementing these, I saw around %15-20 decrease on Redis Engine CPU Utilization as well.